Lee Optimization Group

We pursue research in machine learning and optimization. To this end, we develop theories and algorithms using computational and mathematical tools. Our ultimate goal is to provide robust and provable solutions to challenging problems in artificial intelligence, particularly those in large-scale settings. We are passionate about translating our findings into practical applications that can benefit society.

Research focus

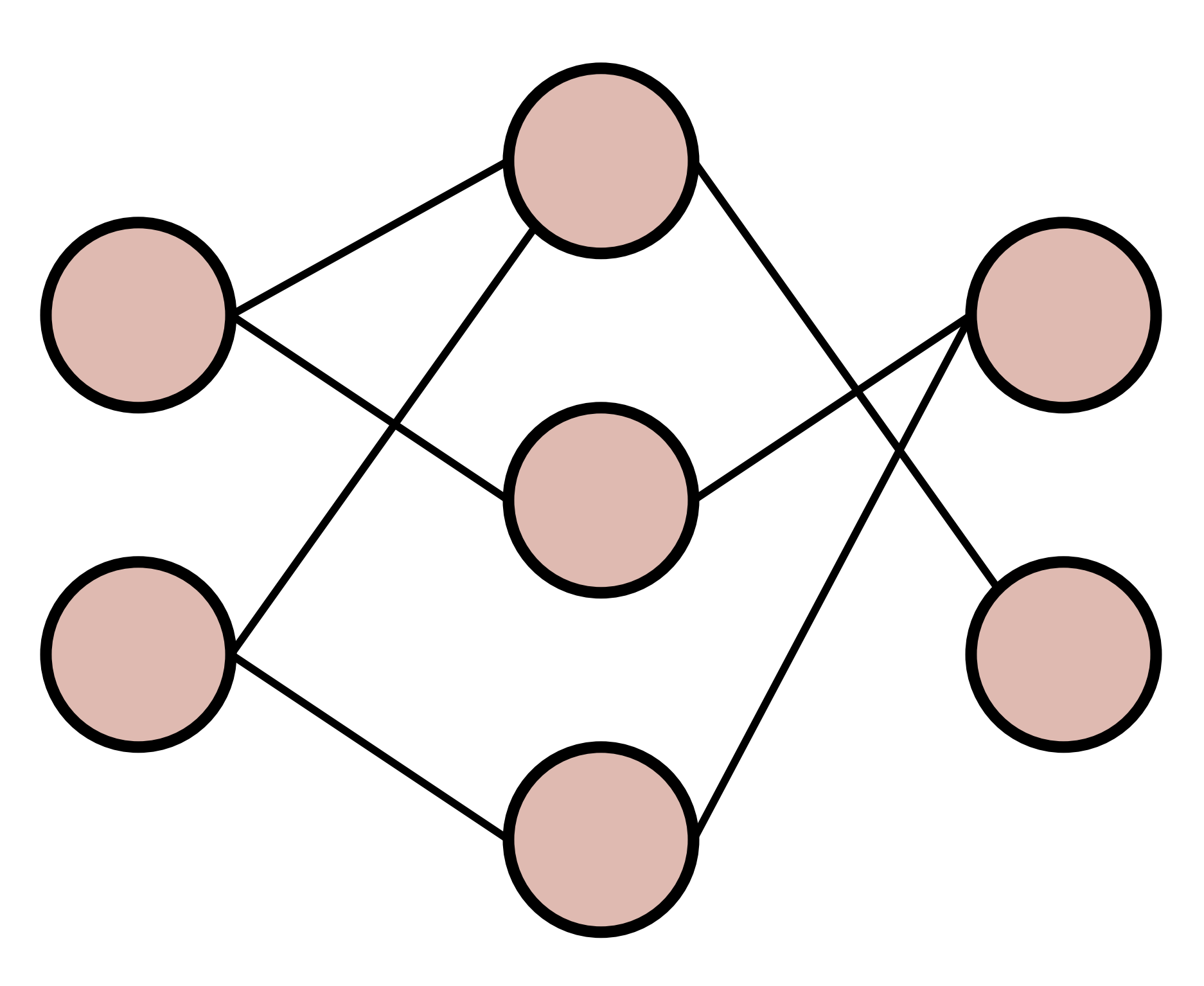

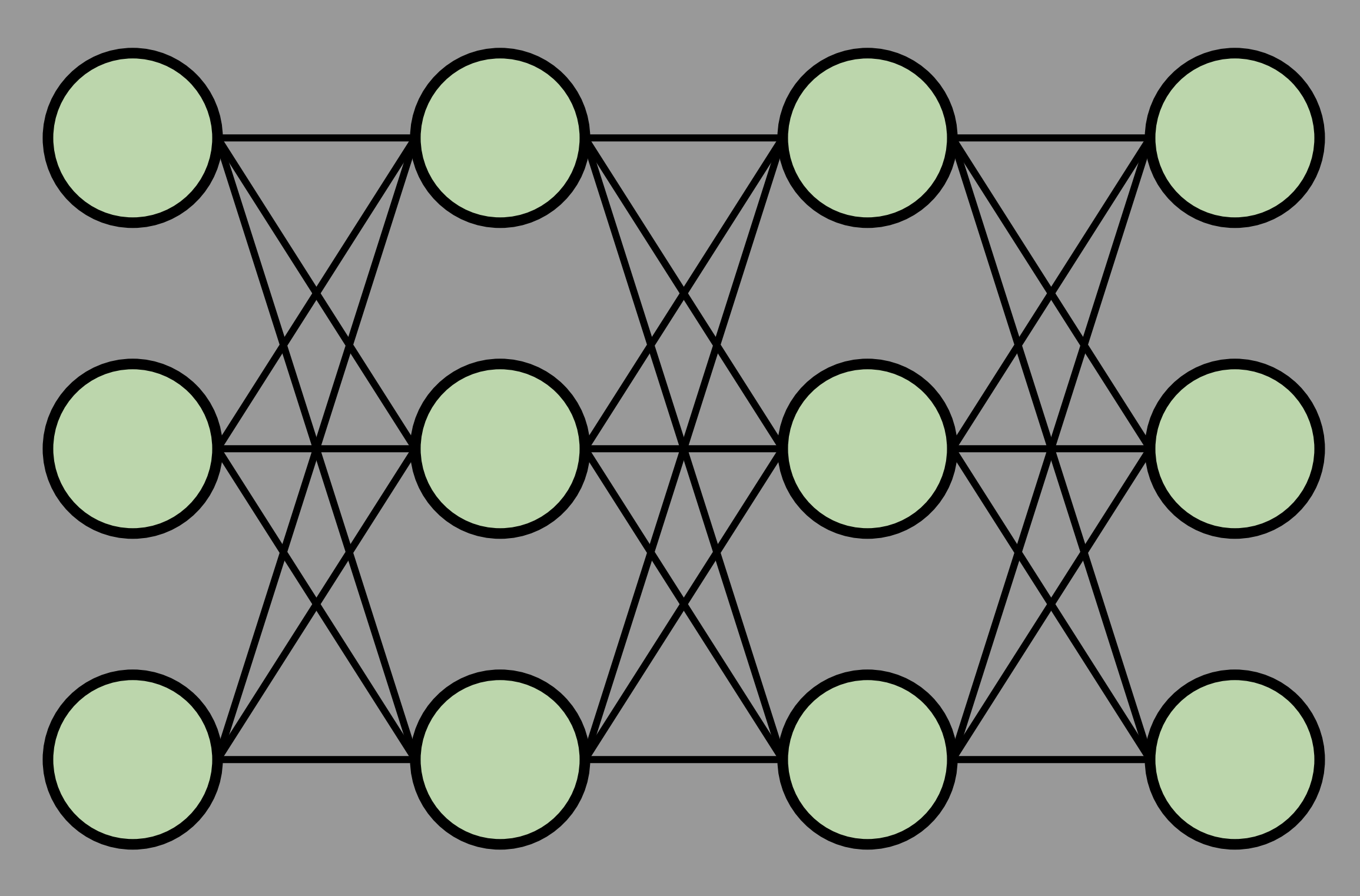

Optimization for Model Compression

As modern AI models, particularly deep neural networks, grow increasingly large and computationally demanding, model compression has become essential for enabling broader and more sustainable use of AI technologies.

We develop optimization methods to compress large models so that they run more efficiently during training and inference without significantly compromising their performance.

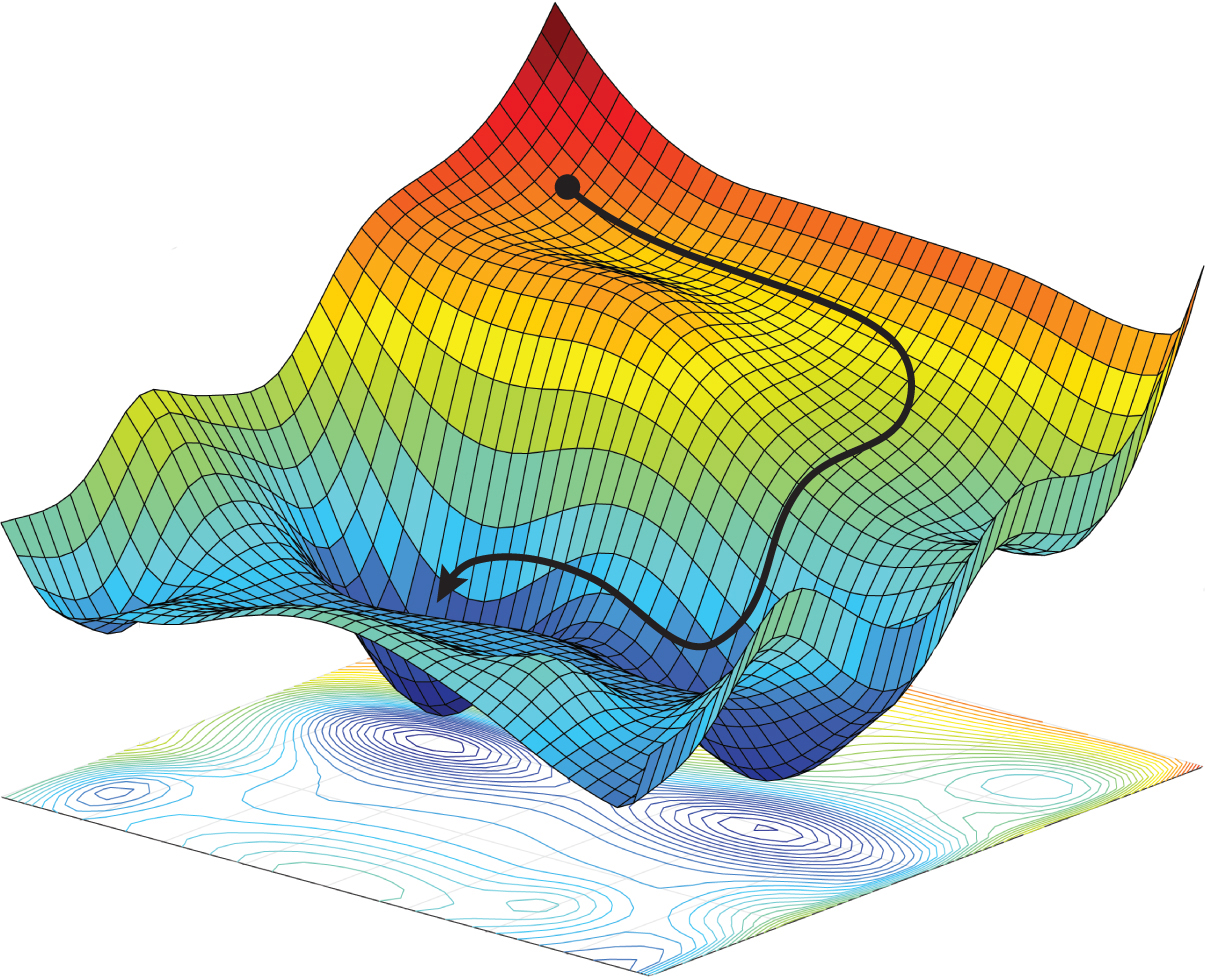

Optimization for Deep Learning

Optimization has been central to the success of deep learning, yet many challenges remain, such as non-convexity, high dimensionality, and scalability.

We study both theoretical foundations (such as convergence guarantees and generalization properties) and practical algorithms (including stochastic, adaptive, and second-order methods), with the aim of developing efficient and principled optimization methods.

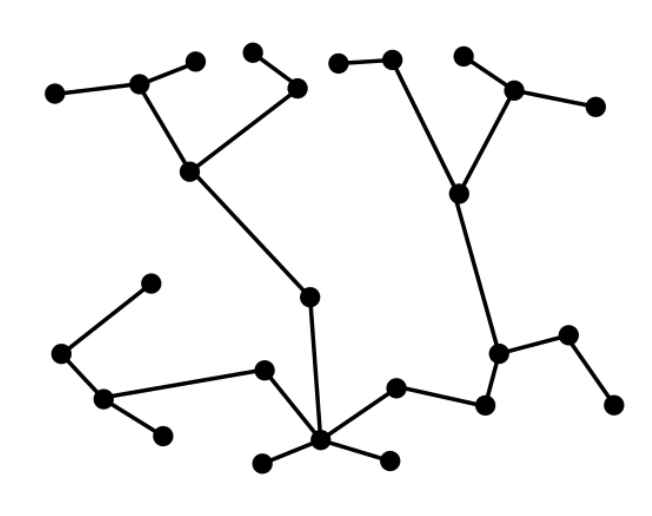

Optimization for Collaborative Learning

Collaborative machine learning is a promising paradigm that allows participants to jointly train a model without necessarily sharing their raw data.

However, the frequent exchange of model updates can overwhelm networks, especially with large models or slow connections.

We address the challenges that arise from the distributed and often decentralized nature of collaborative learning by developing efficient and scalable solutions.

Optimization for Other Applications

Optimization is a versatile tool that can be applied to a wide range of machine learning applications.

We are currently focused on addressing challenges surrounding large language models, particularly those involving learning in black-box environments, continual adaptation, and multi-modal contexts.

Members

Administrator

Dami So

Graduate students

Jinseok Chung

Donghyun Oh

Jihun Kim

Hyunji Jung

Jiyun Park

Doyoon Kim

Alumni

Dahun Shin (MS 2025); Seonghwan Park (MS 2025); Jaehyeon Jeong (MS 2026)

Stories

We also spend time together on various occasions (see the gallery for more).

Acknowledgements

Our research is generously supported by multiple organizations including government agencies (NRF, IITP), industry (Google, Samsung, Naver, Intel), and academic institutions (POSTECH, Yonsei).